Abstract

Vision transformers (ViTs) have pushed the state of the art for visual perception tasks. The self-attention mechanism underpinning the strength of ViTs has quadratic complexity in both computation and memory usage. This motivates the development of methods that approximate self-attention at linear complexity. However, an in-depth analysis in this work reveals that existing methods are either theoretically flawed or empirically ineffective for visual recognition. We identify that their limitations are rooted in the inheritance of softmax-based self-attention during approximation, that is, normalizing the scaled dot-product between token feature vectors using the softmax function. Preserving the softmax operation challenges any subsequent linearization effort. Based on this insight, a family of SOftmax-Free Transformers (SOFT) is proposed. Specifically, a Gaussian kernel function is adopted to replace the dot-product similarity, enabling a full self-attention matrix to be approximated under low-rank matrix decomposition. For computational robustness, we estimate the Moore–Penrose inverse using an iterative Newton–Raphson method in the forward process only, while calculating its theoretical gradients only once in the backward process. To further expand applicability (e.g., dense prediction tasks), an efficient symmetric normalization technique is introduced. Extensive experiments on ImageNet, COCO and ADE20K show that our SOFT significantly improves the computational efficiency of existing ViT variants. With linear complexity, much longer token sequences are permitted by SOFT, resulting in a superior trade-off between accuracy and complexity. Code and models are available at https://github.com/fudan-zvg/SOFT.

Data Availability Statement

The datasets generated and/or analysed during the current study are available in the ImageNet (Deng et al., 2009) (https://www.image-net.org/), COCO (Lin et al., 2014) (https://cocodataset.org), ADE20K (Zhou et al., 2019) (https://groups.csail.mit.edu/vision/datasets/ADE20K/), Cityscapes (Cordts et al., 2016) (https://www.cityscapes-dataset.com), and Long Range Arena (Tay et al., 2020) (https://github.com/google-research/long-range-arena) repositories.

References

Ba, J.L., Kiros, J.R. & Hinton, G.E. (2016). Layer normalization. arXiv preprint arXiv:1607.06450

Bello, I. (2021). Lambdanetworks: Modeling long-range interactions without attention. In International conference on learning representations.

Ben-Israel, A., & Cohen, D. (1966). On iterative computation of generalized inverses and associated projections. SIAM Journal on Numerical Analysis, 3(3), 410–419.

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., et al. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

Chen, K., Wang, J., Pang, J., Cao, Y., Xiong, Y., Li, X., Sun, S., Feng, W., Liu, Z., Xu, J., et al. (2019). Mmdetection: Open mmlab detection toolbox and benchmark. arXiv preprint arXiv:1906.07155

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F. & Adam, H. (2018). Encoder-decoder with atrous separable convolution for semantic image segmentation. In European conference on computer vision (pp. 801–818).

Cheng, J., Grossman, M. & McKercher, T. (2014). Professional CUDA C Programming.

Choromanski, K., Likhosherstov, V., Dohan, D., Song, X., Gane, A., Sarlos, T., Hawkins, P., Davis, J., Mohiuddin, A., Kaiser, L., et al. (2021). Rethinking attention with performers. In International conference on learning representations.

Chu, X., Tian, Z., Wang, Y., Zhang, B., Ren, H., Wei, X., Xia, H., & Shen, C. (2021). Twins: Revisiting the design of spatial attention in vision transformers. Advances in Neural Information Processing Systems, 34, 9355–9366.

Cordts, M., Omran, M., Ramos, S., Rehfeld, T., Enzweiler, M., Benenson, R., Franke, U., Roth, S. & Schiele, B. (2016). The cityscapes dataset for semantic urban scene understanding. In IEEE conference on computer vision and pattern recognition (pp. 3213–3223).

Dai, Z., Liu, H., Le, Q. V., & Tan, M. (2021). Coatnet: Marrying convolution and attention for all data sizes. Advances in Neural Information Processing Systems, 34, 3965–3977.

d’Ascoli, S., Touvron, H., Leavitt, M.L., Morcos, A.S., Biroli, G. & Sagun, L. (2021). Convit: Improving vision transformers with soft convolutional inductive biases. In International conference on machine learning (pp. 2286–2296). PMLR.

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K. & Fei-Fei, L. (2009). Imagenet: A large-scale hierarchical image database. In IEEE conference on computer vision and pattern recognition (pp. 248–255).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. (2019). Bert: Pre-training of deep bidirectional transformers for language understanding. In Conference of the North American chapter of the association for computational linguistics: human language technologies, NAACL-HLT (pp. 4171–4186). Association for Computational Linguistics.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., et al. (2021). An image is worth 16x16 words: Transformers for image recognition at scale. In International conference on learning representations.

Fasshauer, G. E. (2011). Positive definite kernels: Past, present and future. Dolomites Research Notes on Approximation, 4, 21–63.

Geng, Z., Guo, M.-H., Chen, H., Li, X., Wei, K. & Lin, Z. (2021). Is attention better than matrix decomposition? In International conference on learning representations.

Guo, M.-H., Liu, Z.-N., Mu, T.-J., & Hu, S.-M. (2022). Beyond self-attention: External attention using two linear layers for visual tasks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(5), 5436–5447.

He, K., Gkioxari, G., Dollár, P. & Girshick, R. (2017). Mask r-cnn. In IEEE international conference on computer vision (pp. 2961–2969).

He, K., Zhang, X., Ren, S. & Sun, J. (2016). Deep residual learning for image recognition. In IEEE conference on computer vision and pattern recognition (pp. 770–778).

Huang, Z., Wang, X., Huang, L., Huang, C., Wei, Y. & Liu, W. (2019). Ccnet: Criss-cross attention for semantic segmentation. In IEEE international conference on computer vision (pp. 603–612).

Ioffe, S. & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning (pp. 448–456). PMLR.

Jaegle, A., Gimeno, F., Brock, A., Vinyals, O., Zisserman, A. & Carreira, J. (2021). Perceiver: General perception with iterative attention. In International conference on machine learning (pp. 4651–4664). PMLR.

Kasai, J., Peng, H., Zhang, Y., Yogatama, D., Ilharco, G., Pappas, N., Mao, Y., Chen, W. & Smith, N.A. (2021). Finetuning pretrained transformers into rnns. In Conference on empirical methods in natural language processing (pp. 10630–10643).

Katharopoulos, A., Vyas, A., Pappas, N. & Fleuret, F. (2020). Transformers are rnns: Fast autoregressive transformers with linear attention. In International conference on machine learning (pp. 5156–5165). PMLR.

Kitaev, N., Kaiser, Ł. & Levskaya, A. (2020). Reformer: The efficient transformer. In International conference on learning representations.

Krizhevsky, A., Hinton, G., et al. (2009). Learning multiple layers of features from tiny images.

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. (2017). Focal loss for dense object detection. In IEEE international conference on computer vision (pp. 2980–2988).

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P. & Zitnick, C.L. (2014). Microsoft coco: Common objects in context. In European conference on computer vision (pp. 740–755). Springer.

Liu, Z., Hu, H., Lin, Y., Yao, Z., Xie, Z., Wei, Y., Ning, J., Cao, Y., Zhang, Z., Dong, L., et al. (2022). Swin transformer v2: Scaling up capacity and resolution. In IEEE conference on computer vision and pattern recognition (pp. 12009–12019).

Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z., Lin, S. & Guo, B. (2021). Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 10012–10022).

Liu, Z., Mao, H., Wu, C.-Y., Feichtenhofer, C., Darrell, T. & Xie, S. (2022). A convnet for the 2020s. In IEEE conference on computer vision and pattern recognition (pp. 11976–11986).

Long, J., Shelhamer, E. & Darrell, T. (2015). Fully convolutional networks for semantic segmentation. In IEEE conference on computer vision and pattern recognition (pp. 3431–3440).

Lu, J., Yao, J., Zhang, J., Zhu, X., Xu, H., Gao, W., Xu, C., Xiang, T., & Zhang, L. (2021). Soft: Softmax-free transformer with linear complexity. Advances in Neural Information Processing Systems, 34, 21297–21309.

Maas, A., Daly, R.E., Pham, P.T., Huang, D., Ng, A.Y. & Potts, C. (2011). Learning word vectors for sentiment analysis. In Proceedings of the 49th annual meeting of the association for computational linguistics: Human language technologies (pp. 142–150).

MindSpore. (2020). https://www.mindspore.cn/

Nangia, N. & Bowman, S.R. (2018). Listops: A diagnostic dataset for latent tree learning. In Conference of the North American chapter of the association for computational linguistics, student research workshop (pp. 92–99).

Peng, H., Pappas, N., Yogatama, D., Schwartz, R., Smith, N.A. & Kong, L. (2021). Random feature attention. In International conference on learning representations.

Radev, D. R., Muthukrishnan, P., Qazvinian, V., & Abu-Jbara, A. (2013). The acl anthology network corpus. Language Resources and Evaluation, 47, 919–944.

Radosavovic, I., Kosaraju, R.P., Girshick, R., He, K. & Dollár, P. (2020). Designing network design spaces. In IEEE conference on computer vision and pattern recognition (pp. 10428–10436).

Sun, S., Yue, X., Bai, S. & Torr, P. (2021). Visual parser: Representing part-whole hierarchies with transformers. arXiv preprint arXiv:2107.05790

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. (2016). Rethinking the inception architecture for computer vision. In IEEE conference on computer vision and pattern recognition (pp. 2818–2826).

Tay, Y., Dehghani, M., Abnar, S., Shen, Y., Bahri, D., Pham, P., Rao, J., Yang, L., Ruder, S. & Metzler, D. (2020). Long range arena: A benchmark for efficient transformers. In International conference on learning representations.

Tay, Y., Dehghani, M., Bahri, D., & Metzler, D. (2023). Efficient transformers: A survey. ACM Computing Surveys, 55(6), 109:1–109:28.

Touvron, H., Cord, M. & Jégou, H. (2022). Deit iii: Revenge of the vit. In European conference on computer vision (pp. 516–533).

Touvron, H., Cord, M., Douze, M., Massa, F., Sablayrolles, A. & Jégou, H. (2021). Training data-efficient image transformers & distillation through attention. In International conference on machine learning (pp. 10347–10357). PMLR.

Touvron, H., Cord, M., Sablayrolles, A., Synnaeve, G. & Jégou, H. (2021). Going deeper with image transformers. In IEEE conference on computer vision and pattern recognition (pp. 32–42).

Tsai, Y.-H.H., Bai, S., Yamada, M., Morency, L.-P. & Salakhutdinov, R. (2019). Transformer dissection: A unified understanding of transformer’s attention via the lens of kernel. In Conference on empirical methods in natural language processing and international joint conference on natural language processing (EMNLP-IJCNLP) (pp. 4344–4353).

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł. & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30.

Von Luxburg, U. (2007). A tutorial on spectral clustering. Statistics and Computing, 17, 395–416.

Wang, X., Girshick, R., Gupta, A. & He, K. (2018). Non-local neural networks. In IEEE conference on computer vision and pattern recognition (pp. 7794–7803).

Wang, S., Li, B.Z., Khabsa, M., Fang, H. & Ma, H. (2020). Linformer: Self-attention with linear complexity. arXiv preprint arXiv:2006.04768

Wang, W., Xie, E., Li, X., Fan, D.-P., Song, K., Liang, D., Lu, T., Luo, P. & Shao, L. (2021). Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In IEEE international conference on computer vision (pp. 568–578).

Wang, W., Xie, E., Li, X., Fan, D.-P., Song, K., Liang, D., Lu, T., Luo, P., & Shao, L. (2022). Pvt v2: Improved baselines with pyramid vision transformer. Computational Visual Media, 8(3), 415–424.

Wightman, R. (2019). PyTorch Image Models. https://github.com/rwightman/pytorch-image-models

Williams, C. & Seeger, M. (2000). Using the Nyström method to speed up kernel machines. Advances in Neural Information Processing Systems, 13.

Xiao, T., Liu, Y., Zhou, B., Jiang, Y. & Sun, J. (2018). Unified perceptual parsing for scene understanding. In European conference on computer vision (pp. 418–434).

Xie, S., Girshick, R., Dollár, P., Tu, Z. & He, K. (2017). Aggregated residual transformations for deep neural networks. In IEEE conference on computer vision and pattern recognition (pp. 1492–1500).

Xiong, Y., Zeng, Z., Chakraborty, R., Tan, M., Fung, G., Li, Y., & Singh, V. (2021). Nyströmformer: A nyström-based algorithm for approximating self-attention. AAAI Conference on Artificial Intelligence, 35, 14138–14148.

Xu, W., Xu, Y., Chang, T. & Tu, Z. (2021). Co-scale conv-attentional image transformers. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 9981–9990).

Yoshida, Y. & Miyato, T. (2017). Spectral norm regularization for improving the generalizability of deep learning. arXiv preprint arXiv:1705.10941

Yuan, Y., Chen, X. & Wang, J. (2020). Object-contextual representations for semantic segmentation. In European conference on computer vision (pp. 173–190).

Yuan, L., Chen, Y., Wang, T., Yu, W., Shi, Y., Jiang, Z.-H., Tay, F.E., Feng, J. & Yan, S. (2021). Tokens-to-token vit: Training vision transformers from scratch on imagenet. In IEEE international conference on computer vision (pp. 558–567).

Yun, S., Han, D., Oh, S.J., Chun, S., Choe, J. & Yoo, Y. (2019). Cutmix: Regularization strategy to train strong classifiers with localizable features. In IEEE international conference on computer vision (pp. 6023–6032).

Zhang, H., Cissé, M., Dauphin, Y.N. & Lopez-Paz, D. (2018). mixup: Beyond empirical risk minimization. In International conference on learning representations.

Zhang, P., Dai, X., Yang, J., Xiao, B., Yuan, L., Zhang, L. & Gao, J. (2021). Multi-scale vision longformer: A new vision transformer for high-resolution image encoding. In IEEE international conference on computer vision (pp. 2998–3008).

Zhang, L., Xu, D., Arnab, A. & Torr, P.H. (2020). Dynamic graph message passing networks. In IEEE conference on computer vision and pattern recognition (pp. 3726–3735).

Zhao, H., Jia, J. & Koltun, V. (2020). Exploring self-attention for image recognition. In IEEE conference on computer vision and pattern recognition (pp. 10076–10085).

Zhao, H., Shi, J., Qi, X., Wang, X. & Jia, J. (2017). Pyramid scene parsing network. In IEEE conference on computer vision and pattern recognition (pp. 2881–2890).

Zheng, S., Lu, J., Zhao, H., Zhu, X., Luo, Z., Wang, Y., Fu, Y., Feng, J., Xiang, T., Torr, P.H., et al. (2021). Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In IEEE conference on computer vision and pattern recognition (pp. 6881–6890).

Zhou, B., Zhao, H., Puig, X., Xiao, T., Fidler, S., Barriuso, A., & Torralba, A. (2019). Semantic understanding of scenes through the ade20k dataset. International Journal of Computer Vision, 127, 302–321.

Zhu, X., Su, W., Lu, L., Li, B., Wang, X. & Dai, J. (2021). Deformable detr: Deformable transformers for end-to-end object detection. In International conference on learning representations.

Acknowledgements

This work was supported in part by STI2030-Major Projects (Grant No. 2021ZD0200204), National Natural Science Foundation of China (Grant No. 62106050 and 62376060), Natural Science Foundation of Shanghai (Grant No. 22ZR1407500) and Lingang Laboratory (Grant No. LG-QS-202202-07).

Additional information

Communicated by Jingdong Wang, Phd.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Nyström Method

The Nyström method (Williams & Seeger, 2000) computes a low-rank approximation of a Gram matrix. For Transformers, the self-attention matrix can be viewed as a Gram matrix S with a Gaussian kernel k applied to the query Q, with each element \( S_{ij}\) expressed as:

\[ S_{ij} = k\left( Q_{i,:}, Q_{j,:}\right) , \]

where \(Q_{i,:}\) denotes the i-th row of Q.

Here k(x, y) denotes the Gaussian kernel k evaluated at (x, y), which can be written in the feature space as:

\[ k(x, y) = \sum _{i=1}^{n} \lambda _i \phi _i(x) \phi _i(y) \]

Here n is the dimension of the feature space, \(\lambda _i\) denotes the eigenvalue and \(\phi _i\) denotes the eigenfunction of kernel k. According to the definition of an eigenfunction, we get:

\[ \int k(y, x)\, \phi _i(x)\, p(x)\, dx = \lambda _i \phi _i(y) \]

where p(x) is the probability distribution of x, and the \(\{\phi _i(x)\}\) are p-orthogonal:

\[ \int \phi _i(x)\, \phi _j(x)\, p(x)\, dx = \delta _{ij} \]

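Here \(\delta _{ij}\) is 0 when \(i \ne j\) and 1 when \(i = j\). To approximate the eigenfunctions, we sample \(\{x_1,x_2,\cdots ,x_q\}\) from p(x), then:

\[ \frac{1}{q}\sum _{t=1}^{q} k(y, x_t)\, \phi _i(x_t) \approx \lambda _i \phi _i(y), \qquad \frac{1}{q}\sum _{t=1}^{q} \phi _i(x_t)\, \phi _j(x_t) \approx \delta _{ij} \]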

This inspires us to approximate the Gram matrix S. Let \(S^{(m)}\) be a submatrix of S, consisting of \(m \times m\) elements from S. A Gram matrix is symmetric positive semi-definite, so it has a spectral decomposition:

\[ S^{(m)} U^{(m)} = U^{(m)} \Lambda ^{(m)} \]

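where \(U^{(m)}\) is column orthogonal and \(\Lambda ^{(m)}\) is a diagonal matrix with the diagonal elements as the eigenvalues of \(S^{(m)}\). Substituting \(x_j\) for y and applying the approximation above to S, we get:

\[ \phi _i(x_j) \approx \sqrt{m}\, U^{(m)}_{j,i}, \qquad \lambda _i \approx \frac{\lambda _i^{(m)}}{m}, \qquad \phi _i(y) \approx \frac{\sqrt{m}}{\lambda _i^{(m)}} \sum _{t=1}^{m} k(y, x_t)\, U^{(m)}_{t,i} \]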

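Here \(\lambda _i\) is an eigenvalue of S and \(\lambda _i^{(m)}\) is an eigenvalue of \(S^{(m)}\). Denote by \(\tilde{S}\) the rank-m approximation of S and by \(\tilde{U}, \tilde{\Lambda }\) the approximate spectral decomposition of S. We then obtain an approximation of S with rank m:

\[ \tilde{S} = \tilde{U} \tilde{\Lambda } \tilde{U}^{\top } = \sum _{t=1}^{m} \tilde{\lambda }_t\, \tilde{u}_t \tilde{u}_t^{\top } , \]

where \(\tilde{\lambda }_t\) is the t-th diagonal entry of \(\tilde{\Lambda }\) and \(\tilde{u}_t\) is the t-th column of \(\tilde{U}\).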

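Similarly, we have:

\[ \phi _i(x_j) \approx \sqrt{n}\, \tilde{U}_{j,i}, \qquad \lambda _i \approx \frac{\tilde{\lambda }_i}{n} \]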

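Thus

\[ \tilde{\lambda }_i \approx \frac{n\, \lambda _i^{(m)}}{m}, \qquad \tilde{u}_i \approx \sqrt{\frac{m}{n}}\, \frac{1}{\lambda _i^{(m)}}\, S_{n,m}\, u_i^{(m)} , \]

where \(u_i^{(m)}\) is the i-th column of \(U^{(m)}\) and \(S_{n,m}\) is the \(n \times m\) block of S formed by the sampled columns.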

Then we get an approximation of S: \(\tilde{S} \approx S_{n,m} S_{m,m}^{\dagger } S_{m,n}\). S has the following block representation:

\[ S = \begin{bmatrix} S_{m,m} & S_{m,n-m} \\ S_{n-m,m} & S_{n-m,n-m} \end{bmatrix} \]
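As a minimal numerical sketch of the rank-m approximation \(\tilde{S} \approx S_{n,m} S_{m,m}^{\dagger } S_{m,n}\), the NumPy snippet below builds a Gaussian Gram matrix from random queries and reconstructs it from m sampled rows. The helper names (`gaussian_gram`, `nystrom_gram`), the kernel bandwidth, the uniform random sampling of bottleneck rows, and the use of `np.linalg.pinv` are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def gaussian_gram(X, Y, bandwidth):
    """Gaussian kernel matrix with entries exp(-||x - y||^2 / (2 * bandwidth)).

    The bandwidth is a free parameter of this sketch (an assumption)."""
    sq = (X**2).sum(1)[:, None] + (Y**2).sum(1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth))

def nystrom_gram(Q, sample_idx, bandwidth):
    """Rank-m approximation S_nm @ pinv(S_mm) @ S_mn from m sampled rows of Q."""
    sampled = Q[sample_idx]
    S_nm = gaussian_gram(Q, sampled, bandwidth)        # n x m block
    S_mm = gaussian_gram(sampled, sampled, bandwidth)  # m x m block
    return S_nm @ np.linalg.pinv(S_mm) @ S_nm.T

rng = np.random.default_rng(0)
n, d, m = 256, 16, 32                         # illustrative sizes
Q = 0.5 * rng.standard_normal((n, d))
idx = rng.choice(n, size=m, replace=False)    # uniform sampling (assumption)

S = gaussian_gram(Q, Q, bandwidth=np.sqrt(d))
S_tilde = nystrom_gram(Q, idx, bandwidth=np.sqrt(d))
rel_err = np.linalg.norm(S - S_tilde) / np.linalg.norm(S)
print(f"relative Frobenius error: {rel_err:.3f}")
```

On the sampled rows and columns the reconstruction matches S up to floating-point error, since \(S_{m,m} S_{m,m}^{\dagger } S_{m,m} = S_{m,m}\); the approximation error is confined to the remaining entries.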

Appendix B: Newton Method

Proof of Proposition 1

When \(\alpha \) is sufficiently small, the iterates \(A_{k+1}=2A_k-A_k A A_k\) with \(A_0=\alpha A\) converge to \(A^{\dagger }\). \(\square \)

Proof

In our case, A is a symmetric positive semi-definite matrix with \(A_{ij}\le 1\) for all \(1\le i,j \le n\) and \(A_{ii}=1\) for \(1\le i\le n\). \(A_0\) is chosen to be \(\alpha A\), so \(A_k\) can be written as \(A_k=C_k A =AD_k\) for some matrices \(C_k,D_k\), leading to the fact that

\[ A^{\dagger } A A_k = A_k, \qquad A_k A A^{\dagger } = A_k \]

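This is because \(A_{k+1}=A_k(2I_n - AA_k)=(2I_n - A_kA)A_k\) and \(A_0=\alpha A\). Taking the difference between \(A^{\dagger }\) and \(A_{k+1}\) gives:

\[ A^{\dagger } - A_{k+1} = A^{\dagger } - 2A_k + A_k A A_k = A^{\dagger } - A_k A A^{\dagger } - A^{\dagger } A A_k + A_k A A_k = (A^{\dagger } - A_k)\, A\, (A^{\dagger } - A_k) \tag{B16} \]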

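Taking norms on both sides of the equation above:

\[ \Vert A^{\dagger } - A_{k+1}\Vert \le \Vert A^{\dagger } - A_k\Vert \, \Vert A A^{\dagger } - A A_k\Vert \tag{B17} \]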

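Left-multiplying both sides of Eq. (B16) by A and then taking norms:

\[ \Vert A A^{\dagger } - A A_{k+1}\Vert = \Vert A (A^{\dagger } - A_k)\, A (A^{\dagger } - A_k)\Vert \le \Vert A A^{\dagger } - A A_k\Vert ^2 \]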

We choose \(\alpha \) sufficiently small so that the initial value satisfies \(\Vert AA^{\dagger }-A A_0\Vert < 1\). We set \(\alpha =\frac{2}{\Vert A\Vert _1^2}\) to ensure it is small enough (Ben-Israel & Cohen, 1966). Then \(\Vert A A^\dagger - A A_{k}\Vert \rightarrow 0\) as \(k \rightarrow \infty \), and the inequality (B17) implies that \(A_k \rightarrow A^{\dagger }\) as \(k \rightarrow \infty \). \(\square \)
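The iteration analyzed in this proof can be checked numerically. The following NumPy sketch runs \(A_{k+1}=2A_k-A_k A A_k\) from \(A_0=\alpha A\) with \(\alpha =2/\Vert A\Vert _1^2\) on a small Gaussian Gram matrix and compares the result with `np.linalg.pinv`. The function name `newton_pinv`, the iteration count, the kernel bandwidth and the test matrix are assumptions chosen for illustration only.

```python
import numpy as np

def newton_pinv(A, num_iters=50):
    """Iterate A_{k+1} = 2 A_k - A_k A A_k starting from A_0 = alpha * A."""
    alpha = 2.0 / np.linalg.norm(A, 1) ** 2   # 1-norm = maximum absolute column sum
    A_k = alpha * A
    for _ in range(num_iters):
        A_k = 2.0 * A_k - A_k @ A @ A_k
    return A_k

# Test matrix: symmetric PSD Gaussian Gram matrix with unit diagonal and
# entries at most 1, matching the setting assumed in the proof.
rng = np.random.default_rng(0)
m, d = 16, 8                                  # illustrative sizes
X = rng.standard_normal((m, d))
sq_dist = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)
A = np.exp(-sq_dist / (2.0 * np.sqrt(d)))     # bandwidth is an assumption

A_newton = newton_pinv(A)
max_err = np.max(np.abs(A_newton - np.linalg.pinv(A)))
print(f"max abs deviation from np.linalg.pinv: {max_err:.2e}")
```

Because the residual \(AA^{\dagger }-AA_k\) is squared at every step, the number of iterations needed depends mainly on how close the initial residual norm is to 1, which in turn depends on the conditioning of A.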

Proof of equation 12

Proof

Since \(XY=I\), taking differentials on both sides gives

\[ dX\, Y + X\, dY = 0 \quad \Longrightarrow \quad dY = -Y\, dX\, Y \]

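For the loss function \({\mathcal {L}}\), we have

\[ d{\mathcal {L}} = \langle \nabla _X {\mathcal {L}}, dX\rangle = \langle \nabla _Y {\mathcal {L}}, dY\rangle \]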

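Recalling that the inner product is \(\langle A,B\rangle = \text {Tr}(A^\top B)\) and that \(\text {Tr}(ABC)=\text {Tr}(CAB)=\text {Tr}(BCA)\), and substituting \(dY=-Y\, dX\, Y\), we have

\[ \text {Tr}\left( (\nabla _X {\mathcal {L}})^\top dX\right) = \text {Tr}\left( (\nabla _Y {\mathcal {L}})^\top dY\right) = -\text {Tr}\left( (\nabla _Y {\mathcal {L}})^\top Y\, dX\, Y\right) = -\text {Tr}\left( Y (\nabla _Y {\mathcal {L}})^\top Y\, dX\right) = \langle -Y^\top (\nabla _Y {\mathcal {L}})\, Y^\top , dX\rangle \]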

Therefore, Eq (12) is proved. \(\square \)
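For \(XY=I\), the differential argument in this proof yields \(\nabla _X {\mathcal {L}} = -Y^\top (\nabla _Y {\mathcal {L}}) Y^\top \). The sketch below compares that expression with central finite differences. The linear loss \({\mathcal {L}}(Y)=\langle W,Y\rangle \), the matrix size and the step size are assumptions made purely for this sanity check.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5
X = np.eye(n) + 0.1 * rng.standard_normal((n, n))   # invertible, close to identity
W = rng.standard_normal((n, n))                     # L(Y) = <W, Y>, so grad_Y L = W

def loss(X_in):
    """Loss as a function of X through Y = X^{-1}."""
    return np.sum(W * np.linalg.inv(X_in))

Y = np.linalg.inv(X)
grad_analytic = -Y.T @ W @ Y.T                      # -Y^T (grad_Y L) Y^T

# Central finite differences, one entry of X at a time.
eps = 1e-6
grad_numeric = np.zeros_like(X)
for i in range(n):
    for j in range(n):
        E = np.zeros_like(X)
        E[i, j] = eps
        grad_numeric[i, j] = (loss(X + E) - loss(X - E)) / (2.0 * eps)

max_abs_diff = np.max(np.abs(grad_analytic - grad_numeric))
print(f"max abs difference: {max_abs_diff:.2e}")
```

Using the closed form in the backward pass avoids differentiating through every step of an iterative inverse, which is the motivation stated in the abstract for computing the theoretical gradient only once.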

Appendix C: Attention Normalization

Proof of Proposition 5

Assume the bottleneck matrix of softmax-free attention, \(A\in {\mathbb {R}}^{m\times m}\), is k-connected. If \(\lambda _1\ge \lambda _2\ge \cdots \ge \lambda _m \ge 0\) are the eigenvalues of \(A^{\dagger }\), then \(\lambda _1 = {\mathcal {O}}(m^2)\) and \(\Vert A^{\dagger }\Vert _2 = {\mathcal {O}}(m^2)\). \(\square \)

To prove Proposition 5, we first introduce the following lemmas.

Lemma 1

If F is a non-singular and symmetric matrix, then \(\Vert F\Vert _2\le \Vert F\Vert _1\).

Proof

According to the definition of the 2-norm, there is a nonzero vector z such that \(F^TFz=\mu ^2z\) with \(\mu = \Vert F\Vert _2\). Therefore, \(\mu ^2\Vert z\Vert _1 = \Vert F^TFz\Vert _1\le \Vert F^T\Vert _1\Vert F\Vert _1\Vert z\Vert _1 = \Vert F\Vert _{\infty }\Vert F\Vert _1\Vert z\Vert _1\). Since F is non-singular and symmetric,

\[ \Vert F\Vert _2 \le \sqrt{\Vert F\Vert _{\infty }\Vert F\Vert _1} = \Vert F\Vert _1 \]

\(\square \)

Lemma 2

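If F is a real matrix and \(\Vert F\Vert _p < 1\), then

\[ (I-F)^{-1} = \sum _{k=0}^{\infty } F^k \tag{C20} \]

with

\[ \Vert (I-F)^{-1}\Vert _p \le \frac{1}{1-\Vert F\Vert _p} \]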

Proof

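Since \(\Vert F\Vert _p<1\), \(\lim _{k\rightarrow \infty } F^k=0\). Thus,

\[ \left( \sum _{k=0}^{N} F^k\right) (I-F) = I - F^{N+1} \longrightarrow I \quad (N\rightarrow \infty ) \]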

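Multiplying the equation by \((I-F)^{-1}\), we obtain Eq. (C20). From that, we easily get

\[ \Vert (I-F)^{-1}\Vert _p \le \sum _{k=0}^{\infty } \Vert F\Vert _p^k = \frac{1}{1-\Vert F\Vert _p} \]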

\(\square \)
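Both lemmas can be sanity-checked numerically. The sketch below draws a random symmetric matrix to compare \(\Vert F\Vert _2\) with \(\Vert F\Vert _1\) (Lemma 1), then rescales a random matrix so that \(\Vert F\Vert _1 = 0.5\) and compares a truncated series \(\sum _k F^k\) with \((I-F)^{-1}\) (Lemma 2, taking p = 1). The matrix size, the scaling factor 0.5 and the truncation at 200 terms are assumptions of this example.

```python
import numpy as np

rng = np.random.default_rng(0)
m = 6

# Lemma 1: a symmetric matrix satisfies ||F||_2 <= ||F||_1.
G = rng.standard_normal((m, m))
F_sym = G + G.T
norm2 = np.linalg.norm(F_sym, 2)     # spectral norm
norm1 = np.linalg.norm(F_sym, 1)     # maximum absolute column sum

# Lemma 2 with p = 1: rescale F so that ||F||_1 = 0.5 < 1.
F = rng.standard_normal((m, m))
F *= 0.5 / np.linalg.norm(F, 1)

partial_sum = np.zeros((m, m))
power = np.eye(m)                    # holds F^k, starting at F^0 = I
for _ in range(200):
    partial_sum += power
    power = power @ F

inv = np.linalg.inv(np.eye(m) - F)
series_gap = np.max(np.abs(partial_sum - inv))
lhs = np.linalg.norm(inv, 1)
rhs = 1.0 / (1.0 - np.linalg.norm(F, 1))
print(norm2 <= norm1, series_gap, lhs <= rhs)
```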

Now we begin to prove Proposition 5.

Proof

Since the 1-norm \(\Vert A\Vert _1\) of the bottleneck matrix of softmax-free attention is not less than 1, we normalize the matrix as \(A_n = D^{-1}A\), where \(D = \text {diag}(A\mathbb {1}_m)\). From Lemma 2, it follows that

Since we assume the bottleneck matrix is k-connected, and \(k \ll m\),

Then,

Note that \(D={\mathcal {O}}(m)\) by the assumption that the bottleneck matrix is k-connected; it follows that

\(\square \)

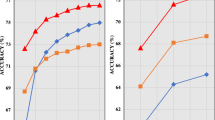

Comparison of attention heatmaps for a selected query patch (indicated by a cross "+") against all patches in an image. Heatmaps are derived from the first head’s corresponding row in the attention maps, as calculated by Eq. 17. These heatmaps are normalized to a 0–1 scale, with warmer colors indicating higher relevance. The model variants compared are: a Transformer (Vaswani et al., 2017), b Performer (Choromanski et al., 2021), c Nystromformer (Xiong et al., 2021), and d Our SOFT approach

Appendix D: Non-linearized Gaussian Kernel Attention

Instead of directly calculating the Gaussian kernel weights, our formulation approximates them. More specifically, the relation between any two tokens is reconstructed via sampled bottleneck tokens. As the number m (e.g., 49) of bottleneck tokens is much smaller than the entire token sequence length, our attention matrix is low-rank. This has two favorable consequences: (I) The model focuses its attentive learning on latent salient information captured by the m bottleneck tokens. (II) The model becomes more robust against underlying token noise due to the auto-encoder-style reconstruction (Geng et al., 2021). This explains why a model with an approximated Gram matrix performs better than one with a directly computed matrix. Further, we find that exact Gaussian kernel attention leads to training difficulties. As Proposition 4 reveals, this is due to the lack of normalization, which leads to an explosion of the spectral norm, especially for long token sequences. A large spectral norm can jeopardize training and tends to collapse the model.

Appendix E: Attention Visualization

Figure 7 shows more visualizations of the attention masks produced by various Transformers (Vaswani et al., 2017; Choromanski et al., 2021; Xiong et al., 2021) and our SOFT. For each model, we show the output from the first two attention heads (top and bottom rows). It is noteworthy that SOFT exhibits better semantic diversity across the heads of the multi-head mechanism than the other methods. Moreover, when we sample a patch at the boundary of multiple objects, SOFT captures all of these objects more precisely.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lu, J., Zhang, J., Zhu, X. et al. Softmax-Free Linear Transformers. Int J Comput Vis (2024). https://doi.org/10.1007/s11263-024-02035-5

DOI: https://doi.org/10.1007/s11263-024-02035-5